The C# Reinforcement Learning Revolution - Breaking Free from Python's Grip

“Why build reinforcement learning in C#?”

This question follows me everywhere. It reveals an unspoken assumption in the machine learning world—that serious ML development happens exclusively in Python. But what if this conventional wisdom is holding us back?

My path to creating RLMatrix wasn’t motivated by language preferences or academic interests. It emerged from practical necessity while solving concrete engineering problems. As it turns out, the tools that work beautifully in research environments often fail to meet the demands of production systems.

Real-World Problems Demand Real Solutions

My journey began with microfluidic device design. For my PhD in the deMello group, I needed to optimize complex fluidic structures beyond what manual prototyping could reasonably achieve.

Microfluidic chips require master molds fabricated in cleanroom conditions—each prototype representing hours of meticulous labor.

Creating and testing these devices is prohibitively expensive. Even minor fabrication variations can render an entire design useless. Computational fluid dynamics simulation offered a path forward, but presented a new challenge: connecting CFD outcomes to reinforcement learning systems.

My challenge quickly proved more demanding than toy problems like CartPole. With partially observable dynamics and complex reward structures, I needed to modify standard algorithms substantially.

My project relied on a pipeline of many external software packages that manipulated large amounts of data. Nearly all of this software provided robust C# SDKs, making C# the natural choice for orchestrating the overall system. Initially, I tried maintaining Python/MATLAB interop for the reinforcement learning components, but this became increasingly unsustainable as my algorithmic modifications grew.

The Debugging Revolution

This revealed the first critical advantage of unifying development in C#: comprehensive debugging. Consider a manufacturing facility deploying reinforcement learning for robotic control—they’ll inevitably need to adapt published algorithms for their specific context. With RLMatrix, engineers can trace execution through the entire reinforcement learning loop, set breakpoints anywhere, and inspect all variables and tensors.

Traditional approaches make this nearly impossible. ML-Agents introduces a Python/C# translation boundary precisely where visibility is most crucial. Other frameworks offer “black box” algorithms accessed via socket connections, with minimal transparency into internal operations. This approach isn’t just inconvenient; it’s fundamentally inadequate for industrial applications, the equivalent of hitching modern factory equipment to a horse-drawn carriage.

What began as a practical solution revealed transformative capabilities I hadn’t anticipated.

Universal Compatibility

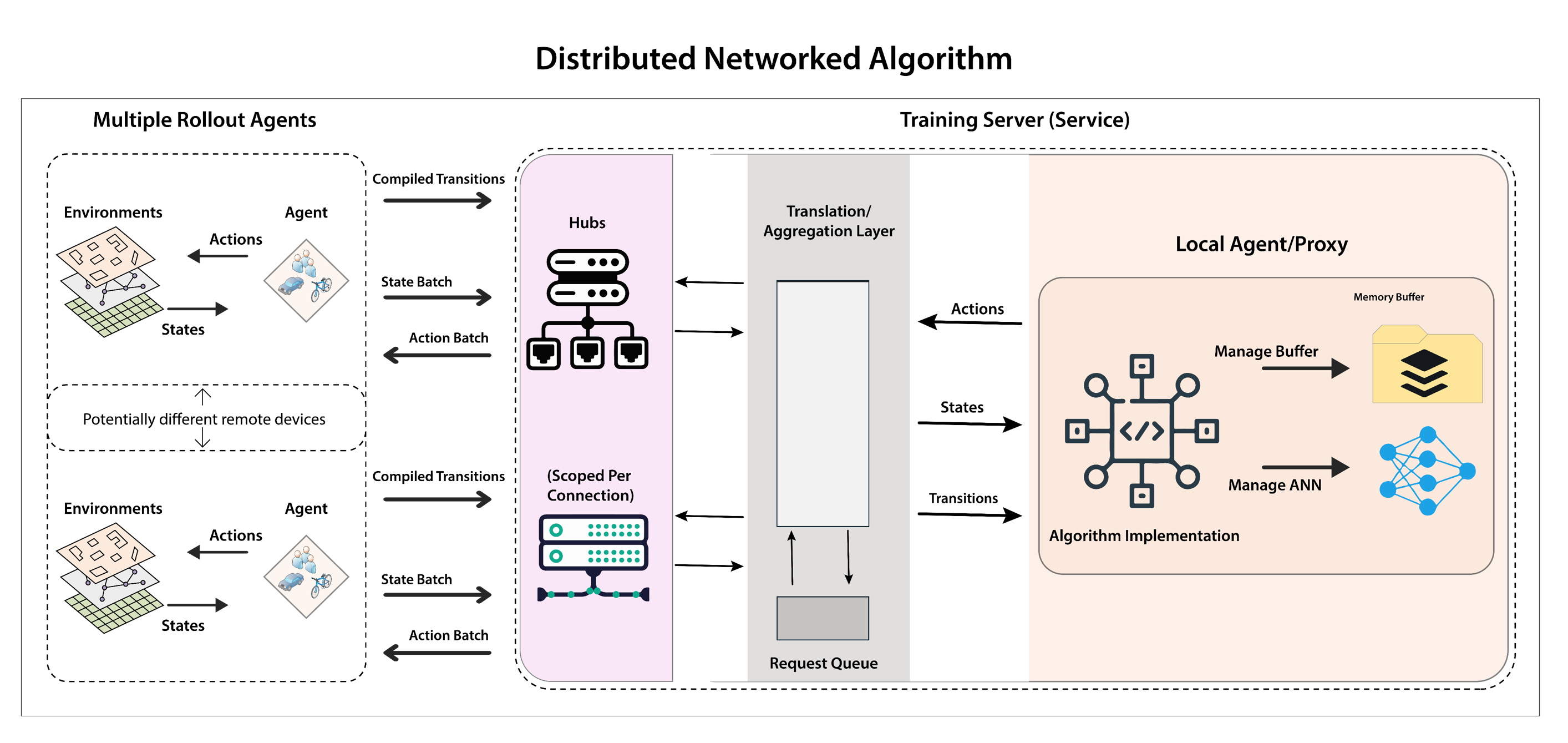

Unlike framework-specific implementations, RLMatrix works with any C# API. With support for both modern .NET and .NET Standard 2.0, it runs everywhere from cloud services to Unity games. More importantly, it enables seamless transitions between development and distributed deployment:

```csharp
// Local development
var agent = new LocalContinuousRolloutAgent<float[]>(optsppo, env);
```

```csharp
// Deploy to a compute cluster: a one-line change
var agent = new RemoteContinuousRolloutAgent<float[]>("http://127.0.0.1:5006/rlmatrixhub", optsppo, env);
```

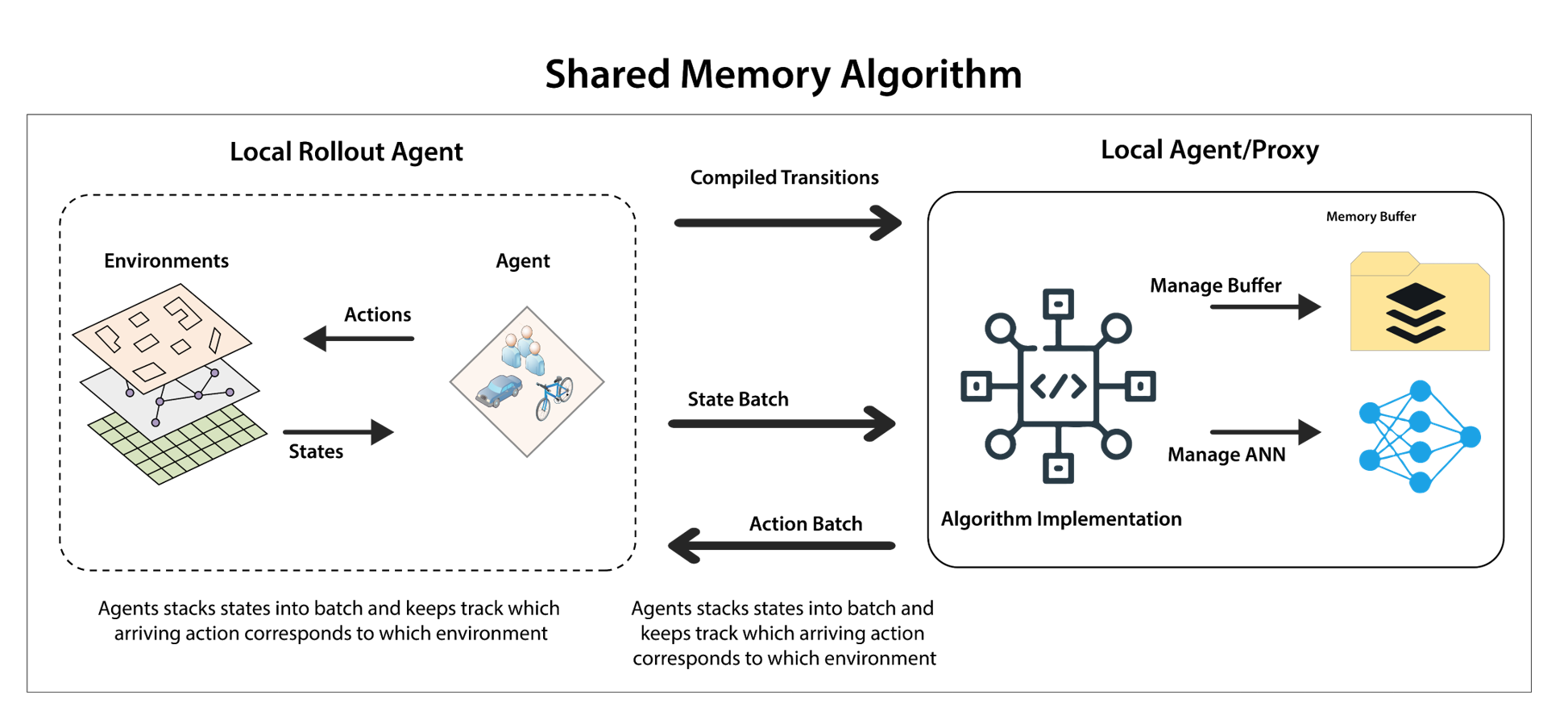

Local training architecture

Distributed training architecture

This isn’t just convenient—it eliminates an entire class of deployment problems. Develop locally, then scale to distributed compute resources without rewriting or refactoring your implementation.
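One way to get this kind of one-line swap is to have the training code depend on a shared abstraction rather than on a concrete agent. The sketch below is hypothetical. `IRolloutAgent`, `LocalAgent`, `RemoteAgent` and `Training.Run` are illustrative names, not RLMatrix's API, and the remote agent only reports its hub address instead of doing real networking.

```csharp
using System;

// Hypothetical abstraction: RLMatrix's real agent types may be organized differently.
public interface IRolloutAgent
{
    // Runs one training step and returns a short description of where it ran.
    string Step();
}

// Stand-in for an agent that trains in-process.
public sealed class LocalAgent : IRolloutAgent
{
    public string Step() => "trained locally";
}

// Stand-in for an agent that forwards work to a remote training hub.
public sealed class RemoteAgent : IRolloutAgent
{
    private readonly Uri _hub;

    public RemoteAgent(string hubUrl) => _hub = new Uri(hubUrl);

    // A real implementation would send experiences over the network here.
    public string Step() => $"sent to {_hub.Host}:{_hub.Port}";
}

public static class Training
{
    // The loop depends only on the interface, so replacing LocalAgent
    // with RemoteAgent does not touch this code.
    public static string[] Run(IRolloutAgent agent, int steps)
    {
        var log = new string[steps];
        for (int i = 0; i < steps; i++)
            log[i] = agent.Step();
        return log;
    }
}
```

For example, `Training.Run(new LocalAgent(), 1)` returns `["trained locally"]`, while `Training.Run(new RemoteAgent("http://127.0.0.1:5006/rlmatrixhub"), 1)` returns `["sent to 127.0.0.1:5006"]`. Only the construction site changes; the loop itself stays the same.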

Performance That Matters

The performance characteristics startled me. RLMatrix collects experiences asynchronously while the core engine processes batches, then vectorizes them for parallel GPU execution. This substantially outperforms the traditional approach of processing experiences sequentially.
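The collection pattern described above can be sketched with `System.Threading.Channels`. Several producer tasks stand in for environments and write transitions concurrently. A single consumer groups those transitions into batches and flattens their states into one contiguous buffer, which is the layout a tensor upload wants. This is a minimal sketch under stated assumptions, not RLMatrix's engine: `Experience` and `AsyncCollector` are hypothetical, the environments are faked, and the GPU step is left out.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Channels;
using System.Threading.Tasks;

// Hypothetical experience record: one environment transition.
public readonly record struct Experience(float[] State, int Action, float Reward);

public static class AsyncCollector
{
    // Producers (fake environments) write concurrently; one consumer drains
    // the channel into fixed-size batches of flattened states.
    public static async Task<List<float[]>> CollectBatchesAsync(
        int envCount, int stepsPerEnv, int stateSize, int batchSize)
    {
        var channel = Channel.CreateUnbounded<Experience>();

        var producers = new Task[envCount];
        for (int e = 0; e < envCount; e++)
        {
            int envId = e;
            producers[e] = Task.Run(async () =>
            {
                for (int t = 0; t < stepsPerEnv; t++)
                {
                    var state = new float[stateSize];
                    Array.Fill(state, envId + t * 0.01f); // fake observation
                    await channel.Writer.WriteAsync(new Experience(state, t % 2, 1f));
                }
            });
        }
        // Close the channel once every producer has finished writing.
        _ = Task.WhenAll(producers).ContinueWith(done => channel.Writer.Complete());

        var batches = new List<float[]>();
        var pending = new List<Experience>(batchSize);
        await foreach (var exp in channel.Reader.ReadAllAsync())
        {
            pending.Add(exp);
            if (pending.Count == batchSize)
            {
                batches.Add(Flatten(pending, stateSize));
                pending.Clear();
            }
        }
        if (pending.Count > 0) batches.Add(Flatten(pending, stateSize)); // final partial batch
        return batches;
    }

    // Copies each state into one row-major buffer shaped [batch, stateSize].
    private static float[] Flatten(List<Experience> items, int stateSize)
    {
        var buffer = new float[items.Count * stateSize];
        for (int i = 0; i < items.Count; i++)
            Array.Copy(items[i].State, 0, buffer, i * stateSize, stateSize);
        return buffer;
    }
}
```

With three environments of five steps each and a batch size of 4, `CollectBatchesAsync(3, 5, 4, 4)` yields four batches. Three contain 4 transitions and one contains 3, and each is flattened to `count × 4` floats. The order in which environments interleave is nondeterministic, which is usually acceptable for experience batches.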

Python’s fundamental limitation becomes unavoidable in reinforcement learning: it’s excellent at delegating vectorized operations to C++ libraries but painfully slow for everything else. Reinforcement learning involves massive data manipulation outside these optimized paths—precisely where Python falters.

RLMatrix achieved exceptional performance with minimal optimization effort. Basic threading patterns combined with the JIT compiler’s capabilities created a system that dramatically outperforms specialized Python frameworks without sacrificing flexibility.

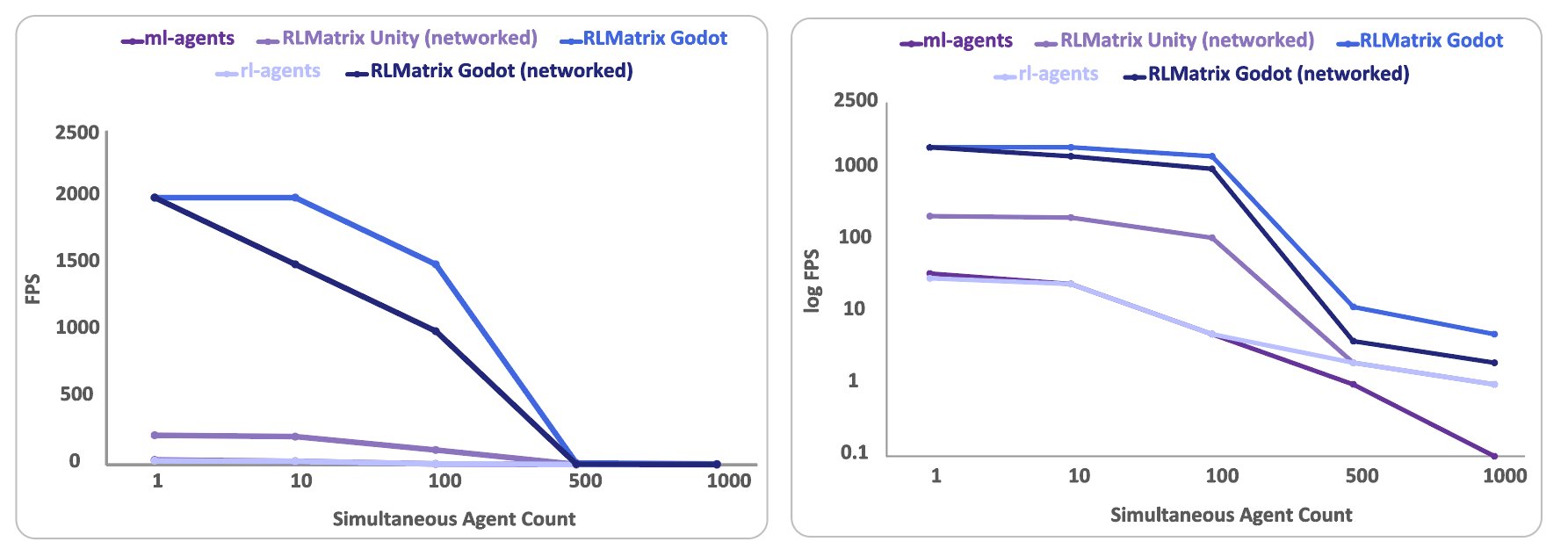

RLMatrix consistently outperforms both ML-Agents and Godot RL Agents in time per step when running identical environments in real time.

Type Safety as a Superpower

The type safety advantage becomes crucial in complex environments:

```python
# Python: dimension errors, type mismatches, and range violations are
# discovered only at runtime, possibly after hours of training
def step(self, actions):
    for motor, action in zip(self.motors, actions):
        motor.apply_torque(action)
```

```csharp
// C#: shapes and types are checked at compile time, and each action's
// valid range is declared once, next to the code that uses it
// (joint1 and joint2 are the environment's own fields).
[RLMatrixActionContinuous(-1, 1)]
public void ControlJoint1(float torque)
{
    joint1.ApplyTorque(torque);
}

[RLMatrixActionContinuous(-1, 1)]
public void ControlJoint2(float torque)
{
    joint2.ApplyTorque(torque);
}
```

Beyond ML-Agents

Let’s be clear about RLMatrix’s capabilities compared to established solutions like ML-Agents. RLMatrix implements a comprehensive algorithm suite that ML-Agents simply cannot match, including full DQN Rainbow variants with prioritized experience replay, noisy networks, and distributional RL. These aren’t academic curiosities—they’re powerful tools that can mean the difference between success and failure in challenging environments.
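Prioritized experience replay, one of the extensions Rainbow combines, replays transitions in proportion to a priority, typically based on the magnitude of the TD error, raised to an exponent α. The sketch below shows that proportional rule, P(i) = p_i^α / Σ_k p_k^α, using a plain linear scan. It illustrates the technique and is not RLMatrix's implementation. It also omits the importance-sampling weights and the sum-tree structure that a practical buffer would use.

```csharp
using System;

// Proportional prioritized sampling: P(i) = p_i^alpha / sum_k p_k^alpha.
// Linear scan for clarity; practical buffers usually use a sum tree for O(log n) draws.
public static class PrioritizedSampler
{
    // Assumes at least one positive priority, otherwise the total is zero.
    public static double[] Probabilities(double[] priorities, double alpha)
    {
        var scaled = new double[priorities.Length];
        double total = 0;
        for (int i = 0; i < priorities.Length; i++)
        {
            scaled[i] = Math.Pow(priorities[i], alpha);
            total += scaled[i];
        }
        for (int i = 0; i < scaled.Length; i++)
            scaled[i] /= total;
        return scaled;
    }

    // Draws one index with probability proportional to priority^alpha.
    public static int Sample(double[] priorities, double alpha, Random rng)
    {
        var probs = Probabilities(priorities, alpha);
        double u = rng.NextDouble();
        double cumulative = 0;
        for (int i = 0; i < probs.Length; i++)
        {
            cumulative += probs[i];
            if (u < cumulative) return i;
        }
        return probs.Length - 1; // guards against floating-point round-off
    }
}
```

With α = 1, priorities `{1, 3}` give probabilities `{0.25, 0.75}`. Setting α = 0 recovers uniform sampling.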

More importantly, RLMatrix isn’t limited to a single framework. While ML-Agents serves only Unity, RLMatrix works across the entire .NET ecosystem—from ASP.NET backends to Godot game development to industrial control systems. This universality eliminates specialized knowledge requirements and fragmented implementations across your technology stack.

The team behind ML-Agents includes brilliant engineers, and their paper is excellent and well worth reading. Their work represents the best possible outcome within the constraints they accepted. But that’s precisely the problem: they began with a fundamental architectural compromise that no amount of engineering brilliance could overcome.

A solo developer with a superior technical foundation outpaced a specialized team not through extraordinary skill, but by rejecting unnecessary constraints. The lesson is clear: choosing the right foundation matters more than team size or budget.

The Transparency Advantage

When engineers encounter issues with Python-based reinforcement learning systems, they face a bewildering array of abstraction layers. Is the problem in their environment code? The Python RL framework? The C++ numerical libraries? The interop layer? Locating and fixing issues becomes a specialized skill in itself.

RLMatrix eliminates this complexity. Engineers see the complete system—from environment simulation to neural network updates—in a single, consistent language with unified debugging tools. This isn’t just about convenience; it fundamentally changes who can successfully deploy reinforcement learning.

With traditional approaches, companies need specialized ML engineers who understand the entire fragmented stack. With RLMatrix, any competent C# developer can understand, modify, and extend reinforcement learning systems. This democratization turns reinforcement learning from an esoteric specialty into a standard tool in the developer toolkit.

The educational value extends beyond professional developers. Students and researchers can trace algorithm execution step-by-step, building genuine understanding rather than treating components as magical black boxes. This transparency accelerates both learning and innovation.

The Source Generation Revolution

Perhaps the most transformative aspect of RLMatrix is how it reshapes the development workflow through C# source generators. Traditional reinforcement learning development follows a tortuous path:

1. Define environment logic

2. Manually implement interfaces with boilerplate code

3. Handle observation space and action space definitions

4. Connect environment to learning algorithm

5. Debug interface mismatches when things inevitably break

The RLMatrix Toolkit eliminates steps 2-4 entirely. Simply annotate your domain code with attributes:

```csharp
[RLMatrixEnvironment]
public partial class IndustrialController
{
    // sensor, heater and MeasureProcessEfficiency() are the application's
    // own members, omitted here for brevity.

    [RLMatrixObservation]
    public float GetTemperature() => sensor.CurrentTemperature;

    [RLMatrixActionContinuous(-100, 100)]
    public void SetHeatingPower(float power)
    {
        heater.ApplyPower(power);
    }

    [RLMatrixReward]
    public float CalculateEfficiency()
    {
        return MeasureProcessEfficiency();
    }
}
```

The source generator automatically produces all the necessary connecting code, with compile-time verification of your entire reinforcement learning pipeline. This isn’t just less code; it’s a fundamentally different approach to the problem, one that keeps you focused on domain logic rather than RL infrastructure.
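To show what the attributes replace, here is the kind of glue code you would otherwise write by hand. This is a hypothetical sketch. `IEnvironmentAdapter` and `HeaterController` are invented for illustration, and the heater physics is a toy. It also assumes the policy emits actions in [-1, 1] that must be rescaled into the declared [-100, 100] range. RLMatrix's generator targets its own interfaces, so its generated code will differ.

```csharp
using System;

// Hypothetical contract a training loop might consume (illustrative names only).
public interface IEnvironmentAdapter
{
    float[] Observe();          // every observation value, in a fixed order
    void Act(float[] actions);  // one entry per continuous action
    float Reward();             // the scalar reward
}

// Toy domain class standing in for IndustrialController.
public partial class HeaterController
{
    public float Temperature { get; private set; } = 20f;

    public float GetTemperature() => Temperature;

    // Toy dynamics: each 100 units of power raises the temperature by 1 degree.
    public void SetHeatingPower(float power) => Temperature += power / 100f;

    public float CalculateEfficiency() => -Math.Abs(Temperature - 60f);
}

// Hand-written glue: gathers observations, rescales actions into the
// declared range and exposes the reward.
public partial class HeaterController : IEnvironmentAdapter
{
    private const float MinPower = -100f, MaxPower = 100f;

    public float[] Observe() => new[] { GetTemperature() };

    public void Act(float[] actions)
    {
        if (actions.Length != 1)
            throw new ArgumentException("Expected exactly one action.", nameof(actions));
        // Map a policy output in [-1, 1] onto [MinPower, MaxPower].
        float a = Math.Clamp(actions[0], -1f, 1f);
        SetHeatingPower(MinPower + (a + 1f) * 0.5f * (MaxPower - MinPower));
    }

    public float Reward() => CalculateEfficiency();
}
```

Every new observation or action requires another manual edit to `Observe` or `Act`, and a mismatch between the two only surfaces when training runs. That bookkeeping is the part a source generator takes over.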

Python persists in machine learning not because of technical merit, but through ecosystem inertia and academic tradition. Its limitations are increasingly evident as reinforcement learning moves from research papers to production systems. The dynamically typed, interpreter-dependent approach makes sense for quick prototyping but becomes actively harmful when reliability and performance matter.

C# provides exactly what production reinforcement learning demands: performance approaching C++, type safety that catches errors before deployment, consistent debugging tools, and modern language features that boost developer productivity. RLMatrix proves that we can implement state-of-the-art algorithms without drowning in boilerplate or performance hacks.

Join the Revolution

The status quo isn’t sustainable. Organizations are discovering the hard way that Python-based reinforcement learning systems crack under production pressures. They demand specialized knowledge to maintain, resist integration with existing systems, and introduce runtime errors that could have been caught at compile time.

As an independent developer committed to changing this paradigm, I’ve created RLMatrix with a practical dual licensing model. Non-commercial and low-income users get full MIT license terms, while commercial applications support continued development. I’ve committed to transitioning the entire project to MIT once licensing agreements and donations reach $300,000 USD, a fraction of what organizations invest in less effective alternatives.

If you’re directing AI initiatives involving reinforcement learning, consider the hidden costs of the Python approach:

- Development time lost to debugging cross-language interfaces

- Performance penalties from interpreted code and GIL limitations

- Deployment complexity when integrating with production systems

- Specialized personnel required for maintenance and modifications

- Runtime errors that could have been prevented at compile time

Production systems deserve tools designed for reliability and performance, not academic prototypes stretched beyond their capabilities. RLMatrix offers a path forward—combining algorithmic sophistication with industrial-strength engineering.

Break free from Python’s grip. Join the RLMatrix revolution.

This manifesto was written by the creator of RLMatrix, advocating for a future where reinforcement learning is accessible, performant, and fully integrated with production software ecosystems.